‘Think about the hype’ - AI holds disruptive potential for health care

Physician experts say artificial intelligence has promise for primary care, but doctors must think critically as they integrate it into patient care.

© phonlamaiphoto - stock.adobe.com

Artificial intelligence (AI) holds significant potential to improve health care efficiency and reduce clinician burden, but it must be implemented responsibly with careful consideration of ethical, regulatory, and practical implications, according to speakers at a session on the topic at the American College of Physicians Internal Medicine Meeting 2024.

“Responsible AI means that a human has to be in the loop to always double-check these things, but nonetheless it does decrease the cognitive burden,” said Deepti Pandita, MD, FACP, FAMIA, chief medical information officer and vice president of informatics at the University of California, Irvine. “Make sure you have a governance process around this, mainly because things can go awry very quickly.”

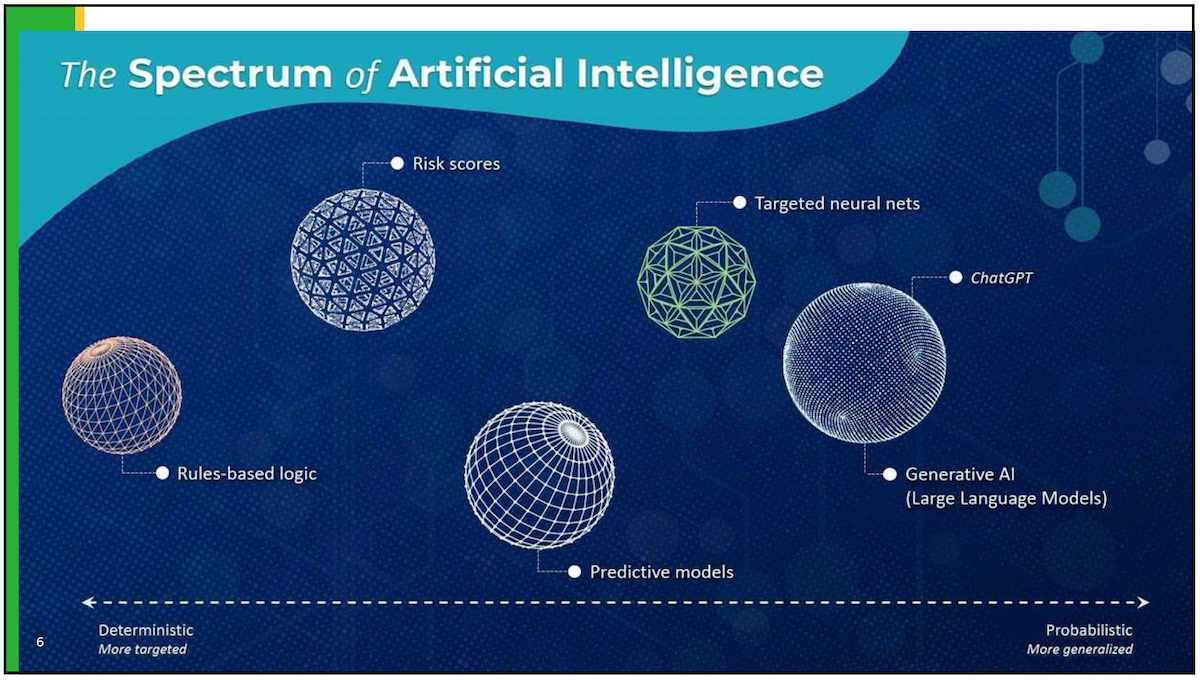

AI has been under development in health care for nearly 40 years, Pandita said, evolving from predictive models to neural networks and now to large language models. With the latest generative AI developments, the list of potential applications has expanded greatly to include translation, documentation (including nursing documentation), billing, medical advice, message responses, and more. These applications aim to reduce the administrative burden on clinicians, she noted.

Deepti Pandita, MD, FACP, FAMIA, CMIO, presented this slide during her talk at the American College of Physicians Internal Medicine Meeting 2024. Pandita was part of a panel discussing how artificial intelligence will affect the future of medicine.

© Deepti Pandita, MD, FACP, FAMIA, CMIO

In her presentation, Pandita offered several practical examples of AI implementation, such as AI-generated responses in clinical settings, automation of administrative tasks like generating patient letters, and auto-generated chat summaries in emergency departments. In a sample of nearly 130 individuals, the automatically generated responses saved an average of three minutes per day, Pandita noted. That time adds up, she said, and use of the chat feature also reduced cognitive burden, which is traditionally associated with burnout.

“Primary care seems to save a lot more time than specialty care,” she added. In their experiment, the time saved per message reached nearly 86% for primary care compared with almost 50% for specialty care, and nearly three-fourths of the AI-generated draft content was retained in the final message. “The studies have shown that the response is more empathetic, and that's my practical experience as well,” she said.

Although AI can seem magical, it is fundamentally based on mathematical algorithms, said Ivana Jankovic, MD, adjunct clinical assistant professor in the Division of Endocrinology, Diabetes and Clinical Nutrition at Oregon Health & Science University. Understanding this helps physicians critically evaluate and use AI tools in clinical practice, she advised.

Ivana Jankovic, MD, presented this slide during her talk at the American College of Physicians Internal Medicine Meeting 2024. Jankovic was part of a panel discussing how artificial intelligence will affect the future of medicine.

© Ivana Jankovic, MD

“Think about the hype. Don't buy into it. Think critically about how you can use it for your patients,” Jankovic said. “You've got to think about not just the output of an algorithm, but what you're going to do with that output. That's actually one of the most important steps, and sometimes we overlook it.”

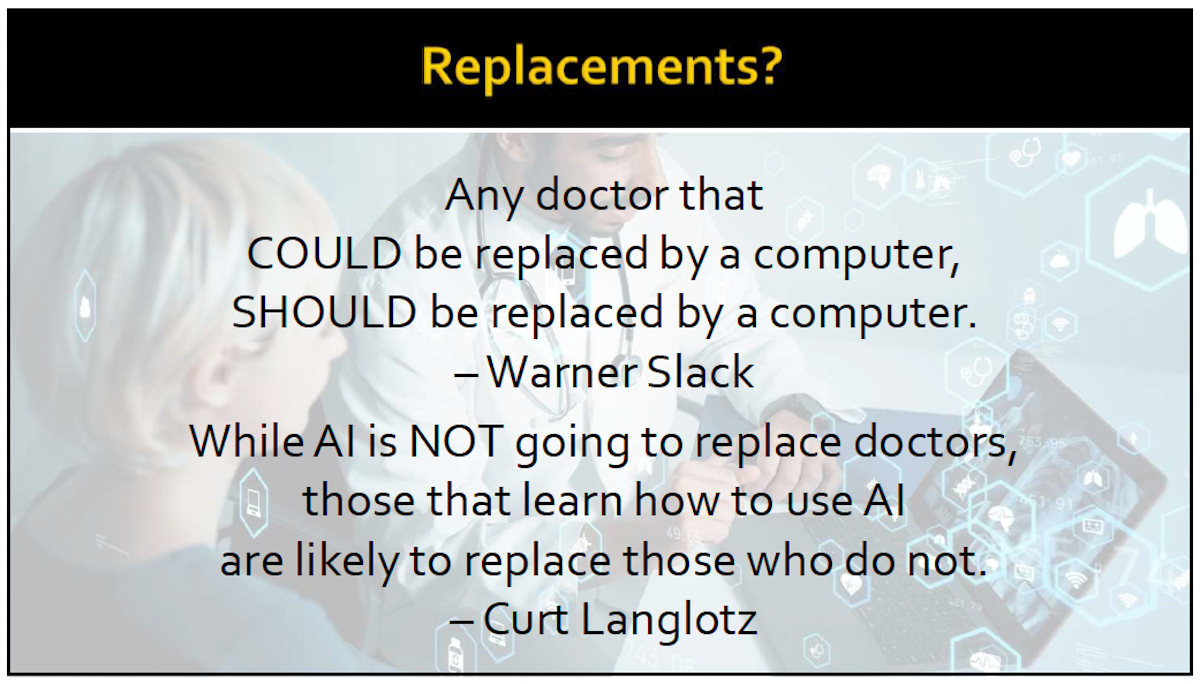

Other considerations include evaluating an algorithm's output, determining whether it is appropriate for a specific case, and assessing its potential biases and limitations. Clinicians already encounter several types of algorithms daily, she noted. Safety is paramount when using AI in clinical practice, and physicians should ensure that AI tools are used appropriately, especially with regard to patient privacy and sensitive data. Additionally, AI should complement clinical judgment rather than replace it.

Drawing on her specialty, Jankovic compared several algorithms currently used to determine insulin doses in diabetes, some that rely on entered clinical data and others based on weight, and set them side by side with a response from ChatGPT. Each method produced a different result, and the results also differed from research findings. ChatGPT recommended an insulin dose that was far too high compared with the other methods. Jankovic discouraged applications such as this, which could lead to poor patient care. By contrast, she showed an example of using AI to translate physician notes into patient-friendly discharge instructions, an application that provided substantial value while reducing physician burden.

“It did a really good job of breaking this down for the patient. Not only did it do this but without me asking, it gave tips,” Jankovic said. “It can make your job faster and easier. It can take things to the level of your patient without you expending effort for it.”

Building on this, the final speaker, Jonathan H. Chen, MD, PhD, assistant professor of medicine at the Stanford Center for Biomedical Informatics Research, opened his talk with an AI-generated video of himself. He noted that AI has been evolving so rapidly that over the course of 2023 alone it went from barely passing medical exams to scoring higher than many physicians.

Jonathan H. Chen, MD, PhD, presented this slide during his talk at the American College of Physicians Internal Medicine Meeting 2024. Chen was part of a panel discussing how artificial intelligence will affect the future of medicine.

© Jonathan H. Chen, MD, PhD

“Now they handily can pass the exams. Not even close. Better than probably your average doctor would,” said Chen. “GPT-4 handily passes open-ended reasoning exams. It's not even close anymore.”

Despite these benefits, each speaker warned about false information. These hallucinations, or confabulations, are one of the main drawbacks of using AI broadly in medicine. To mitigate them, it is better to give the AI source material, such as guidelines or other reference material, and then run queries against those resources. All speakers advised against blindly relying on AI-generated information without verifying its accuracy, especially in critical medical decision-making. To demonstrate the risk, Chen easily prompted an AI to produce false information, including fake PubMed references.

“It's just stringing together author names. The title works itself. That looks like a citation,” said Chen. “There’s no sense of truth or knowledge. Just trying to make it believable. This is dangerous.”